The UK’s communications regulator, Ofcom, has published its first guidance for technology companies since the introduction of tougher online safety laws last month.

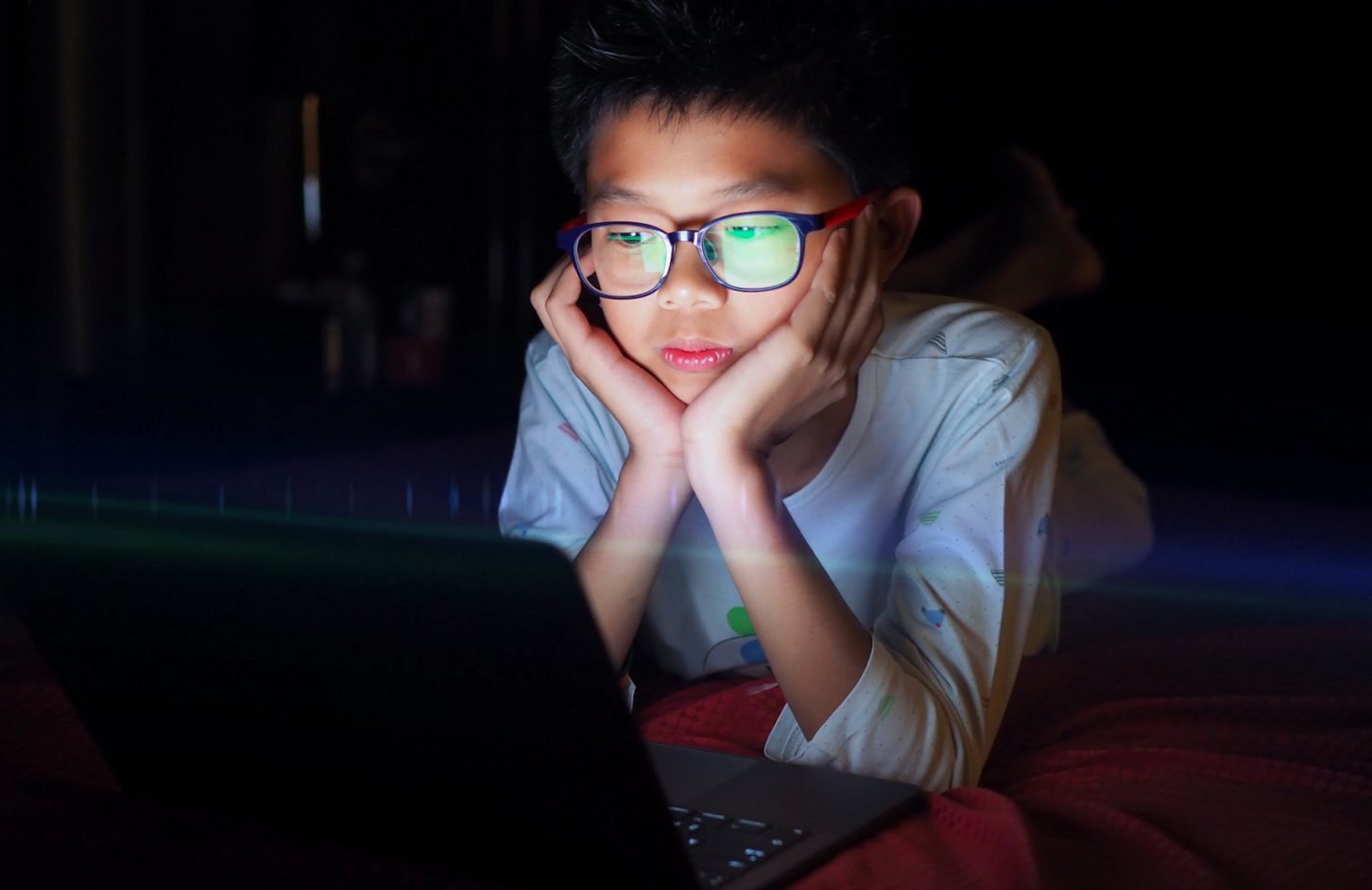

Under the new guidance, children on higher-risk sites would not be given a list of suggested friends or appear within other users’ lists. Their location details would not be viewable by anyone, and they could only be messaged by users they are connected to.

Dame Melanie Dawes, chief executive of Ofcom, said: “Children have told us about the dangers they face, and we’re determined to create a safer life online for young people in particular.”

To combat illegal images of younger people, the regulator is proposing the use of “hash matching”, which compares an image’s digital signature with a database of harmful content and flags when it finds a match.
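As a rough illustration of the idea, the sketch below computes a signature for each uploaded file and checks it against a set of known signatures. It is a minimal example under stated assumptions, not a description of any system Ofcom or the platforms use: the function names are hypothetical, the sample data is made up, and SHA-256 is swapped in for simplicity. An exact cryptographic hash like this only catches byte-for-byte copies, whereas deployed tools are generally understood to rely on perceptual hashes that tolerate resizing or re-encoding.

```python
import hashlib


def digital_signature(image_bytes: bytes) -> str:
    """Return a hex SHA-256 digest of the raw file bytes (assumed hash choice)."""
    return hashlib.sha256(image_bytes).hexdigest()


def build_database(known_images: list[bytes]) -> set[str]:
    """Store only the signatures of known content, never the content itself."""
    return {digital_signature(data) for data in known_images}


def is_flagged(image_bytes: bytes, database: set[str]) -> bool:
    """Flag an upload when its signature matches an entry in the database."""
    return digital_signature(image_bytes) in database


# Hypothetical placeholder bytes standing in for real image files.
database = build_database([b"known-file-a", b"known-file-b"])

print(is_flagged(b"known-file-a", database))    # exact copy: True
print(is_flagged(b"unrelated-file", database))  # no match: False
```

Because the check needs the file’s contents to compute the signature, it has to run where the unencrypted image is available.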

The proposal to use the technology, which is already used by the largest social media companies, comes after ministers admitted it isn’t “technically feasible” to look for child abuse material in encrypted messages.

It is not possible to use hash matching in end-to-end encrypted messaging services.

Dawes said: “Our figures show that most secondary-school children have been contacted online in a way that potentially makes them feel uncomfortable. For many, it happens repeatedly.”

Ofcom is also considering keyword detection to tackle harmful online content.

Under the draft codes, businesses will need to have an accountable individual, dedicated teams, easy reporting and blocking tools, and safety tests whenever they change their recommendation algorithms.

The Online Safety Act, which received Royal Assent last month, will allow Ofcom to fine an offending company up to £18m or 10% of its global revenue, whichever is greater.

Final versions of the guidance are expected to be published in autumn 2024 before they are put forward for parliamentary approval.

Ofcom says it will suggest further guidance on adult sites this year and produce a consultation on ways to clamp down on the promotion of harmful content, such as self-harm, in the spring.
